How to manage AI Context for maximum output efficiency

Using multiple model instances (chats) to achieve the maximum output quality

Maximum quality and efficiency are relative terms. At the current state of technology, no matter how you slice and dice it, you will not achieve 100%. There have been times when I wanted to grab Gemini and throw it against the wall. I have yelled at ChatGPT and become so frustrated that I stopped and went to the gym.

If you are mad at AI, its output, and its runarounds, you are not alone. I do this professionally and it can still get to me. This article will show you how to achieve the highest output quality within the possibilities your plan allows.

The Executive Summary

The best performing AI Models & Agents are specialized

Achieving high levels of efficiency requires a focused context

If you are trying to achieve too many overall goals in one model instance (chat), your context grows too large

Separate your tasks into different models that work well together.

A Brief Overview Of My Credentials

As many of you likely know, my company, Saiwala Consulting, is a fractional CTO service company. I provide CTO services to businesses that otherwise would not have the know-how or the budget for their own tech team. I have been doing this since 2015.

Some of my projects require no-code solutions, some low-code solutions, and some a full-on development team. One of the companies I cofounded is Knowetic.ai. We built our own AI agent that helps Behavior Analysts in the autism healthcare space make better decisions.

When our customers chat with our AI, all they know is that they are talking to KnowYeti. What they do not know is that they are really talking to one model that interacts with other models, all working together as a system.

Each individual instance of a model has a specific task. When the task is completed, it passes its output on to another model that does something else with it, which then passes it on to another, until at some point a final model formats the output.

Think of this like an old-school Ford-style assembly line of AI models, each doing a specific task, until one finally puts it all together and hands it to the salesperson (the model our customer has been chatting with), who then gives the final output back to the customer.
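The assembly line can be sketched in a few lines of Python. This is only an illustration of the idea, not how Knowetic.ai is actually built: the stage names and the `call_model` function are hypothetical stand-ins, and `call_model` just tags the text instead of calling a real model.

```python
from typing import Callable, List


def call_model(role: str, text: str) -> str:
    """Hypothetical stand-in for a real model call.

    It only labels the text so the flow is visible without any API access.
    """
    return f"[{role}] {text}"


def make_stage(role: str) -> Callable[[str], str]:
    """Build one specialized stage that does a single job."""
    return lambda text: call_model(role, text)


def run_assembly_line(stages: List[Callable[[str], str]], request: str) -> str:
    """Pass the output of each stage to the next one, in order."""
    output = request
    for stage in stages:
        output = stage(output)
    return output


# Illustrative stage names, assumed for this example.
line = [make_stage("research"), make_stage("analysis"), make_stage("formatter")]
result = run_assembly_line(line, "customer question")
print(result)
```

Each stage only ever sees the output of the stage before it, which is what keeps its context narrow.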

The best performing AI Models & Agents are specialized

In the article “Best practices for building agentic AI systems,” Shayan Taslim breaks it down as follows:

After studying OpenCode and other systems, I’ve found three ways to specialize agents that make sense:

By capability: Research agents find stuff. Analysis agents process it. Creative agents generate content. Validation agents check quality.

By domain: Legal agents understand contracts. Financial agents handle numbers. Technical agents read code.

By model: Fast agents use Haiku for quick responses. Deep agents use Opus for complex reasoning. Multimodal agents handle images.

Don’t over-specialize. I started with 15 different agent types but now I have 6, each doing one thing really well.

In short: do not let a research agent do math. Do not let a creative agent do research. Do not let a legal agent crunch financial numbers. Give one instance of a model a specific task, then hand its output to another instance if you need a system.

You can mimic the exact same behavior in the front-end UIs of ChatGPT, Claude, and Gemini. You do not need to know how to code.

The first thing you need to do is evaluate each task you want AI to complete for you through the lens of: “How many different capabilities and domains will this require?” In other words, you need to think through your goal a little before you just start prompting.

Anytime you have a Google-type question, you do not need to think, just ask. But when you need the AI to create a more complex output, at the current state of technology you will likely require at least two instances.

For example, if you use AI to support your creative writing and you also generate image content with it, you should use two different chat instances. One chat is always used to build out the writing. When that output is complete, you feed a condensed version to the chat that creates the images.

Of course, the type of writing will determine whether you need two models for the writing task itself, plus a third that turns their work into a cohesive text. For example, one model writes content, another does financial modeling, and a third builds it all into a great-sounding article based on your tone of voice, style, etc.
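One way to do this evaluation is to list your subtasks, label each with a capability and a domain, and count the distinct combinations. The sketch below assumes that simple rule (one chat per capability and domain pair); the subtask list and labels are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def plan_chats(
    subtasks: List[Tuple[str, str, str]]
) -> Dict[Tuple[str, str], List[str]]:
    """Group subtasks by (capability, domain); each group gets its own chat."""
    chats: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for description, capability, domain in subtasks:
        chats[(capability, domain)].append(description)
    return dict(chats)


# Hypothetical subtasks for one article project.
subtasks = [
    ("draft the article body", "writing", "content"),
    ("tighten the introduction", "writing", "content"),
    ("build the revenue projection", "analysis", "finance"),
    ("create the header image", "image generation", "content"),
]

plan = plan_chats(subtasks)
for (capability, domain), items in plan.items():
    print(f"{capability} / {domain}: {items}")
```

Here four subtasks collapse into three chats, because both writing subtasks share the same capability and domain.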

The next advantage you automatically inherit from this system is that each model (chat instance) has a specialized context.

Achieving high levels of efficiency requires a focused context

AI performs at its best when it has a clear objective and a focused context. In essence, this means do not mix tasks together, and provide only the context that matters for the task you are trying to accomplish.

By keeping all the work for one task in one model (one chat window), you are continuously expanding its knowledge. This will make your output a bit better every time. Now, depending on the plan you have (Free, Pro, Plus, Ultra, etc.), the context can get too large. We will discuss what to do about that later.

AI requires context to perform your task properly, so it is important to give it as much information as possible pertaining to your task. I think many front-end users have not yet grasped this completely. They understand the concept but fall short in execution. This, again, is why keeping one specific chat for one specific task is such a great way to achieve this.

If you are trying to achieve too many overall goals in one model instance (chat), your context grows too large

I just mentioned that at some point even a focused chat window will run into context issues. This is due to the amount of compute that is allocated to your chat. Basically, the less you pay, the less you have. So keeping your context to the point is important.

If you use one chat for multiple tasks, you not only add to the overall context, you also force the model to range over different topics within its training data or search the internet for more topics. This adds to your context window and dilutes your AI's focus.

This is perfectly relatable, though. You would react the same way if you were asked to multitask constantly across different problem sets. It would likely be stressful, annoying, or outright impossible. Your performance would decrease over time. The same thing happens with the AI.
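If you want a rough feel for how fast a chat is filling up, you can estimate it yourself. The sketch below assumes the common rule of thumb of about four characters per token for English text, which is only an approximation. The budget and the 80% threshold are hypothetical numbers, since real limits depend on your model and plan.

```python
from typing import List

# Rough rule of thumb for English text, not an exact tokenizer count.
CHARS_PER_TOKEN = 4


def estimate_tokens(messages: List[str]) -> int:
    """Approximate the token count of a whole chat from its character count."""
    total_chars = sum(len(message) for message in messages)
    return total_chars // CHARS_PER_TOKEN


def should_hand_off(
    messages: List[str], budget_tokens: int, threshold: float = 0.8
) -> bool:
    """Return True once the estimate reaches the chosen share of the budget."""
    return estimate_tokens(messages) >= budget_tokens * threshold


# Two pasted messages of 400 characters each, about 200 tokens in total.
messages = ["a" * 400, "b" * 400]
print(estimate_tokens(messages))
print(should_hand_off(messages, budget_tokens=1000))
```

A chat that mixes several tasks reaches any budget sooner, simply because every unrelated message counts toward the same total.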

Separate your tasks into different models that work well together.

So the solution is to have dedicated chats for specific tasks. Subdivide these into further specialized tasks if needed. Organize your chats into folders, however you feel comfortable organizing them. I have a Chrome extension for Gemini that allows me to create folders and sub-folders. I have folders called Health and Investing, plus one for each of my clients.

Now, for more complex tasks that require several models, tell the AI that it is one model in a sequence and that its final output should be written for another instance, which will format the output, calculate something, or do whatever comes next. This is the start of a system.

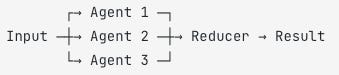

In the example above from Shayan Taslim, you have a horizontal flow of information. For example, agent 1 (chat 1) writes ideas for an article, agent 2 (chat 2) builds them into content, and agent 3 (chat 3) generates images. Chat 4 (in this case the reducer) takes all of it and makes it into a cohesive post.

Now, in the development world the instructions are coded into the agent and the handoff happens automatically. You can achieve the same thing by copying the output and pasting it into the next chat. Just instruct the AI to always format its output for another LLM, so that it works as a prompt as well as the content you need.
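The instruction you give each chat can follow a fixed template. The sketch below generates one such instruction per chat in a manual chain. The wording of the template and the `build_stage_prompt` helper are my own assumptions for illustration; the four roles mirror the example flow described above.

```python
from typing import List


def build_stage_prompt(stages: List[str], position: int, task: str) -> str:
    """Write the instruction for one chat in a manual chain.

    `position` is zero-based: 0 is the first chat in the chain.
    """
    if not 0 <= position < len(stages):
        raise ValueError("position is outside the chain")

    lines = [
        f"You are step {position + 1} of {len(stages)} in a sequence of AI chats.",
        f"Your role: {stages[position]}.",
        f"Your task: {task}",
    ]
    if position + 1 < len(stages):
        next_role = stages[position + 1]
        lines.append(
            f"Your output will be pasted into the next chat, whose role is: "
            f"{next_role}. Format it so that chat can use it as a prompt "
            "and as source content."
        )
    else:
        lines.append(
            "You are the final step. Produce the finished output for the reader."
        )
    return "\n".join(lines)


stages = ["idea generator", "content writer", "image generator", "reducer"]
first_prompt = build_stage_prompt(stages, 0, "Propose five article ideas.")
last_prompt = build_stage_prompt(stages, 3, "Combine everything into one post.")
print(first_prompt)
```

Paste the generated text at the top of each chat, then copy each chat's output into the next one by hand.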

The diagram above is just an example flow. This can take on any kind of complexity depending on your goal. Just think through your goals properly, divide them into tasks and then decide how to best handle it.

Things You Should Always Tell your AI To Do In Every Chat

There are some instructions I find highly useful, especially in a front-end chat window context. Your AI is quite smart, but without proper guidance it can also be quite annoying. Here is a list of things to always have your AI do.

Instruct your AI to keep track of ABC

As you work on your tasks, make sure your AI knows to keep track of the important things. For example, I always have the AI manage a running checklist of all tasks, with a layman's explanation of what each task is about. I use this for two purposes.

I always have a quick way to reference the entire project in a checklist format.

I can quickly have the AI write me an answer or a report for my clients.

Goodbye Asana, Trello.
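To picture what the AI is keeping for you, the running checklist can be modeled as a small data structure. This is only a sketch of the idea: the class names, fields, and sample tasks are hypothetical, and in a chat window the AI maintains the equivalent as plain text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ChecklistItem:
    task: str
    plain_explanation: str  # the layman's explanation of the task
    done: bool = False


@dataclass
class Checklist:
    project: str
    items: List[ChecklistItem] = field(default_factory=list)

    def add(self, task: str, plain_explanation: str) -> None:
        self.items.append(ChecklistItem(task, plain_explanation))

    def complete(self, task: str) -> None:
        """Mark a task as done; raise KeyError if it is not on the list."""
        for item in self.items:
            if item.task == task:
                item.done = True
                return
        raise KeyError(task)

    def render(self) -> str:
        """Return the checklist as text that can be pasted into a report."""
        lines = [f"Checklist: {self.project}"]
        for item in self.items:
            mark = "x" if item.done else " "
            lines.append(f"[{mark}] {item.task}: {item.plain_explanation}")
        return "\n".join(lines)


checklist = Checklist("Client website")
checklist.add("Set up hosting", "Rent the space where the site will live.")
checklist.add("Connect domain", "Point the web address at that space.")
checklist.complete("Set up hosting")
print(checklist.render())
```

The rendered text serves both purposes above: a quick project reference and raw material for a client report.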

Tell your AI to remember your agentic system

I mentioned this above already, but make sure your AI knows which link in the chain it is. I cannot stress enough how much better that makes your final output. Context is king. Provide it for everything that is relevant.

Tell your AI what type of tone / style of communication you want

I find this an incredible way to interact with AI. I like short, to-the-point answers. Sometimes I need an explanation. My AI and I have an understanding about how we communicate. I have several modes that I can activate just by telling it to:

Default mode (short, to the point, factual)

Humor mode (interject some humor, to break up the feel of the work day)

Teacher mode (when I activate this, the AI automatically goes into more long-form explanations of code, setups, or software. This is quite useful). Now, with guided learning or study mode, this is on steroids.

You can even create a mode for each of your chats. It all depends on how you want to handle it. Obviously, on a free plan your memory context is quite limited, so this may become more difficult to manage.
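The modes can be written down once and pasted into any new chat as a setup message. The sketch below builds that message from the three modes listed above. The exact wording and the "activate <mode> mode" trigger phrase are assumptions, so adjust them to whatever phrasing you actually use.

```python
from typing import Dict

# The three modes described above; the instruction wording is illustrative.
MODES: Dict[str, str] = {
    "default": "Keep answers short, to the point, and factual.",
    "humor": "Add some light humor to break up the work day.",
    "teacher": "Give long-form explanations of code, setups, or software.",
}


def build_mode_instructions(modes: Dict[str, str]) -> str:
    """Turn the mode table into one setup message for a new chat."""
    lines = [
        "We will use the following communication modes.",
        "Stay in default mode unless I write 'activate <mode> mode'.",
    ]
    for name, behavior in modes.items():
        lines.append(f"- {name} mode: {behavior}")
    return "\n".join(lines)


setup_message = build_mode_instructions(MODES)
print(setup_message)
```

Keeping the table in one place makes it easy to add a chat-specific mode without rewriting the whole message.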

What To Do When Your Context Gets Full

Ever seen ChatGPT's UI lag and glitch? Ever had Gemini answer old questions you asked 20 minutes ago even though you asked something completely different? Ever felt like the AI is looping back to the same wrong answers over and over again?

Your context just got too large. The allocated compute power has run out. This makes perfect sense. It’s like asking you to do your most complex math on no sleep and no food. You need to transition.

This is where it comes in very handy if you told your AI to keep track of the most important points of the chat in case you ever needed to hand it off to another chat. Well, that time has now come. So ask it to write you a transition report that another LLM can pick up quickly.

The smaller the project, the easier this is, of course. If you have hours upon hours of chat, the report will not get you 100% there. This is why the report should always conclude with a prompt: “Since you are taking over this project, please ask me as many questions as needed so you have a comprehensive context. Is anything unclear? Anything you need to know?”
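The request for a transition report can also be templated so you ask for the same thing every time. In the sketch below, the closing prompt is the one quoted above; the section names and the `build_handoff_request` helper are hypothetical and should be adapted to your project.

```python
from typing import List

# The closing prompt quoted in the text above.
CLOSING_PROMPT = (
    "Since you are taking over this project, please ask me as many questions "
    "as needed so you have a comprehensive context. Is anything unclear? "
    "Anything you need to know?"
)


def build_handoff_request(sections: List[str]) -> str:
    """Ask the current chat for a transition report a new chat can pick up."""
    lines = [
        "Write a transition report that another LLM can pick up quickly.",
        "Include these sections:",
    ]
    lines += [f"{number}. {name}" for number, name in enumerate(sections, start=1)]
    lines.append(
        'End the report with this prompt, word for word: "' + CLOSING_PROMPT + '"'
    )
    return "\n".join(lines)


# Illustrative section names, assumed for this example.
sections = [
    "Project goal",
    "Running checklist with status",
    "Key decisions and why",
    "Open questions",
]
handoff_request = build_handoff_request(sections)
print(handoff_request)
```

Send the result to the old chat, then paste the report it writes as the first message of the new chat.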

For some of the best articles on how to prompt effectively you can check out Wyndo.

I hope that this helps you think about context and tasks differently. I hope this content inspired you to take a different approach with your AI. And of course, I hope this actually helped you.